Deepseek (Over?)Hype Train Debrief

After AI-mageddon and NVIDIA’s market value falling by hundreds of billions of dollars, the Deepseek (over?)hype train is starting to run its course. Now that the noise is dying down, I want to review a few points that commentators have largely overlooked.

The tl;dr of the event is that a Chinese company released a model that is a bit LESS than state-of-the-art but a) far more efficient than other models of similar quality and b) released as an open-source download.

The most illustrative takeaway from this episode is how NVIDIA’s stock price reacted to the news. Efficiency breakthroughs have been occurring fairly consistently for the last couple of years, so why would the number one GPU maker crater on the Deepseek news, dropping nearly 20% before recovering some of the loss?

The market isn’t “crazy” or “dumb.” Prices are driven by expectations. NVIDIA is the number one GPU provider, nearly exclusively so for high-end GPUs. GPUs power AI, at least for today. If the future has more GPUs, then it’s great news for NVIDIA. One way to think about demand for NVIDIA’s products is with a simple framework.

The demand for high-end GPUs, NVIDIA’s golden product, is primarily based on three pieces:

AI Demand – The more end users want AI in their devices, products, and tech stacks, the more GPUs will be needed.

Electricity $ – The higher the price of electricity, the harder it is for your AI ambitions to become economical. If the price of electricity falls, gimme more AI and the only limit is the number of GPUs!

AI Efficiency – AIs can run more efficiently in terms of electricity, produce more out of less sophisticated processors, or both. We’ll assume both for now.

Each of these three pieces can apply to training (creating the models) and inference (our everyday use of the models).
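To make the framework concrete, here is a toy sketch of how the three pieces might combine. Everything in it is an assumption for illustration: the function name `gpu_demand_index`, the functional form, and the `price_sensitivity` parameter are mine, not a real demand model and not something NVIDIA or anyone else publishes. It only captures the directions described above: more AI demand pushes GPU demand up, while pricier electricity and better efficiency push it down.

```python
def gpu_demand_index(ai_demand, electricity_price, efficiency,
                     price_sensitivity=0.5):
    """Toy relative index of high-end GPU demand (baseline = 1.0).

    All inputs are multiples of today's level, so 1.0 means "unchanged".
    The functional form is an illustrative assumption, not an estimate:
      - demand scales up linearly with AI demand,
      - demand scales down linearly with efficiency gains,
      - demand falls as electricity gets pricier, damped by
        price_sensitivity (a hypothetical elasticity-like knob).
    """
    if min(ai_demand, electricity_price, efficiency) <= 0:
        raise ValueError("all inputs must be positive multiples of today's level")
    return ai_demand / (efficiency * electricity_price ** price_sensitivity)


baseline = gpu_demand_index(1.0, 1.0, 1.0)
efficiency_jump = gpu_demand_index(1.0, 1.0, 2.0)   # efficiency doubles, nothing else moves
demand_boom = gpu_demand_index(4.0, 1.0, 2.0)       # efficiency doubles, AI demand quadruples

print(f"baseline:        {baseline:.2f}")
print(f"efficiency jump: {efficiency_jump:.2f}")
print(f"demand boom:     {demand_boom:.2f}")
```

The point of the sketch is that an efficiency jump on its own lowers the index, but the outcome flips as soon as AI demand grows faster than efficiency does. The same function can be evaluated separately for training and for inference with different inputs.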

What changed with Deepseek?

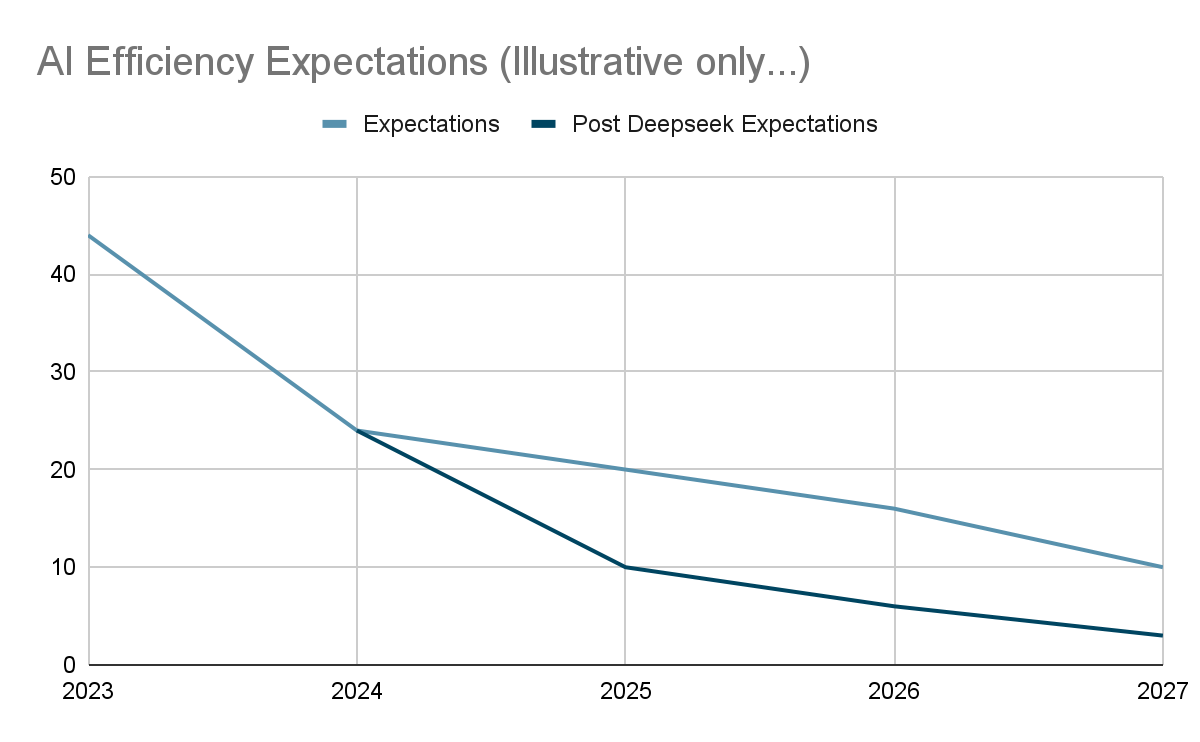

The top AI companies were on a steady track, becoming more efficient over time. Deepseek, however, jumped ahead with creative efficiency innovations that exceeded expectations. Specifically, not only did they become more efficient, but they did so by leaning more heavily on RAM (not an NVIDIA product) and less on compute (NVIDIA’s product).

OpenAI, xAI, Anthropic, Google, and Meta will adopt these techniques and keep marching forward. NVIDIA, on the other hand? Oof. Potentially we all need less of their product.

Is this a death knell for NVIDIA?

No, and the market doesn’t believe so either. However, if compute becomes sufficiently efficient and if it’s performed LOCALLY inside your computer with less state-of-the-art non-NVIDIA processors, it could signal a smaller future for NVIDIA.

What is the probability that a significant portion of AI inference compute moves to local devices? Higher now than a month ago.

However, there are two other significant factors that are still volatile: the cost of electricity and the demand for AI.

Predictions from the most knowledgeable experts on the subject and from the AI companies themselves range wildly, differing by orders of magnitude. Will demand be a quadrillion tokens per year, or a septillion tokens per year?
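For a sense of how wide that range is, here is a quick back-of-the-envelope check. It assumes the short-scale (US) definitions of quadrillion and septillion; the variable names are just for illustration.

```python
import math

# Short-scale (US) definitions, assumed here.
QUADRILLION = 10**15
SEPTILLION = 10**24

ratio = SEPTILLION // QUADRILLION          # how many times larger the high estimate is
orders_of_magnitude = round(math.log10(ratio))

print(f"ratio: {ratio:,}")
print(f"orders of magnitude apart: {orders_of_magnitude}")
```

In other words, the high end of that range is a billion times the low end, which is a big part of why nobody can agree on what NVIDIA’s future looks like.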

Every large technology company is pursuing nuclear energy to support this infrastructure. Does that effort fall wildly short and send the price of electricity skyrocketing, or does electricity become abundant and cheap?

Stock prices are based on expectations, but there is no consensus on expectations.

The bottom line: Deepseek’s release caused a shift in efficiency expectations specifically on compute, and the future demand for AI and GPUs is still highly uncertain.